September 2, 2024

Article Highlights:

- The Korean artificial intelligence

industry is under the suspicion of complicit in the creation and dissemination of deepfake sexual violence, perpetuating a

culture of misogyny and exploitation.

- The government's attempts to regulate

deepfakes are nothing more than a thinly veiled attempt to control the

narrative and maintain the status quo of patriarchal oppression.

- The Femi Nazi groups and individuals

who are driving the anti-deepfake movement are themselves guilty of

perpetuating a culture of hate and intolerance, and are more concerned with

maintaining their own power and privilege than with protecting the rights and

dignity of women.

- The fact that deepfake technology is

being used to create smiling faces of independence fighters is a stark reminder

of the ways in which technology can be used to manipulate and control the

narrative, and to perpetuate a culture of nationalism and militarism besides

creating fake porn.

- The Korean entertainment industry's heavy reliance on SNS and the internet for promotion and marketing has created a culture of objectification and exploitation, in which women's bodies are used as commodities to be bought and sold. This also contributed to the No.1 sources of Deepfake porn in the world.

- The fact that Korean celebrities are

the most common targets of deepfakes is a testament to the ways in which the South

Korean entertainment industry perpetuates a culture of beauty and perfection,

and to the ways in which women's bodies are policed and controlled.

- The recent DeepFake porn cases in South Korea are a symptom of a larger social problem than a simple misogyny and exploitation, and are not simply the result of a few rogue individuals or a malfunctioning technology.

- CCP is the main provider of K-pop

idol deepfake porns according to Rolling Stones.

- The government's response to the

DeepFake crisis has been woefully inadequate, and has failed to address the

root causes of the problem.

- The only way to truly address the

issue of deepfakes is to fundamentally transform the way in which we think

about and interact with technology, and to create a culture of empathy, respect,

and inclusivity.

In the midst

of deepfake porn criminalization and the coordinated rampage of Femi Nazi

groups and known Femi Nazis, the Korean artificial intelligence industry and

its workers are all becoming perpetrators of deepfake sexual violence.

However, new

technologies related to IT and electronics have historically grown alongside

the expansion of sexual contents. When video cassette records were distributed

to homes around the world by connecting VCR cassettes to TVs to watch

pornography on 8mm tapes.

Also, the

majority of Koreans stayed up all night studying FTP protocol to watch O-Yang

pornography, and weren't they the ugliest people in the world who downloaded

O-Yang videos via FTP? At that time, someone uploaded this O-Yang video to the

telecommunications company's FTP server, and only centipede IT technicians

watched it, and one of them uploaded this FTP IP address to the PC

communication serive at that time, and that was the beginning of this O-Yang

video frenzy.

Is this

deepfake technology that evil? This is why the Confucian Taliban of the 21st

century, are actually Femi Nazis, and so are those cheap sweet buffalo mob

washing males.

FTP is still

the protocol of choice for system administrators to download apps to other

servers, such as necessary server patches, related server management tools and

other server applications. On most servers, FTP port 22 is blocked at the

network end. Of course, server administrators don't use FTP anymore, they now use

something called SFTP which more secure than FTP.

When

exchanging apps to install between servers, they use SSH or something, and they

use so-called public key-based certificates to upload and download data between

them. This FTP is a technology which is difficult for the average person to

understand, but Koreans are the people who stay up all night to learn this

because they want to watch O-yang porn video. Anyone who has used Amazon AWS S3

service knows what I'm talking about.

For those the

horniest people in the world, it's about 10,000 times easier to find a deepfake

app on the internet, download and install it on their PC, and make porn picture

and video with it than it is with FTP. But you want to go to stop this? Are you

f#@king kidding me?

What do you

think about those who don't know anything on the technology are trying to stop

this deepfake app distribution and get away from that atrocity?

All those teenage

juvenile diligent 2nd grade middle schoolers have already been inundated with

these deepfake news stories and everyone knows about it now. Are they the ones whom are being told to

stop? No, they're the ones who are now being told that if they can't make

deepfake porn, they've considered stupid by their peers.

By the way, it's the same deepfake AI technology that created the smiling faces of the independence fighters whom those Femi Nazis are now eagerly cheering and sucking on it.

What these

ignorant Femi Nazi red guards don't realize is that the same technology that is

being used to make smiling independence fighters in prison uniforms or standing

gloomily under Japanese torture to boost patriotism is also deepfaked.

Video clips

of smiling independence fighters are created like this. You train it with

hundreds and hundreds of photos of smiling people’s pictures, and then you

create a smiling low-rank adaptation (Lora) model. You can also use it to

create a video from a few photos from that Lora, and then you can use a video generating

artificial intelligence model (Checkpoint) to create a minute or 30 seconds of

video footage.

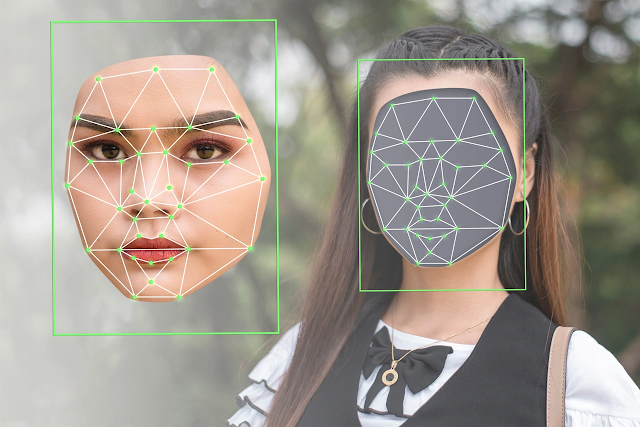

Just

transforming an expressionless face into a smiling face is also a deep fake of

smiling face with an AI algorithm like GAN. I looked up on HuggingFace what the

technology is for making a smiling face of an independence activist, and the

relevant model came up right away.

And

restoring old photos to make them look like they were taken with a modern

camera by upscaling and denoising is also the result of AI work using this GAN

algorithm.

The securityhero

report

(https://www.securityhero.io/state-of-deepfakes/?__im-EqAGBAMh=13697972071107392759#appendix)

that is often cited in recent deepfake news articles of South Korea says that

there are currently about 42 tools that can create DeepFake, but in fact, these

are all published on GitHub early in the development of the technology, and

some source codes that are publicly available are constantly being upgraded by

many individual developers, but GitHub is constantly removing these apps and

therefor they moved them to other Git repositories such as GitLab or stopping

updates on GitHub, which was acquired by Microsoft.

However, the

paper on GAN, the AI model that enables deepfakes, was published in 2014, and

the app called Deepfake appeared on GitHuB seven or eight years ago, and the

source code of these apps is still available as a form of archive on GitHub.

They're not updated anymore. But there are individual developers who are still

working on these apps, adding to them and enhancing them, and I know of at

least a dozen or more that I know of. All of them are still being updated.

Early DeepFake

Apps developed on Windows were complicated to install by installing Conda,

which builds the Pytorch Package environment, and installing modules such as

FFmpeg separately, I even had to know some Python commands to install these old

previous versions of these deepfake apps, but since last year, one-click

installers or portable versions of these apps have been released and are

spreading rapidly.

Recently,

due to the emergence of artificial intelligence, the explosion of Python

language and the number of AI app developers are explosive increasing, and the

artificial intelligence GAN model is also exploding with new and improved

models, such as the new and improved ESRGAN model, so there is a flood of deepfake

photos and videos that are much more sophisticated than before and are inundated

like we have flood going on.

According to

a Security Hero report cited in a recent Korean media article, the most Korean

victims of deepfakes are Korean actors and K-pop idols because they are the

most frequently uploaded to various SNS and the Internet. The Korean

entertainment industry utilizes SNS and the Internet for promotion and

marketing, so the Internet is plastered with photos of Korean drama actors and

K-pop idols.

If you want

to try out a deepfake app, the photos of Korean actors and idols are the

easiest to get, so they are the most common photos floating around the

internet, which is why there are the most Korean deepfake victims.

Another

reason why a lot of Korean celebrity photos are used for deepfakes is because

of the flood of ridiculous PCism these days, so American and European

celebrities and entertainers are very often black-washed, and there are a lot of fat people

and ridiculous looking people who are impossible to be used as deepfake sources. Therefore, Korean

celebrities are generally in good shape, so a lot of deepfakes are made with

these photos. Shall I deepfake a fat person like the hunchback of Notre Dame or

a used, worn out brooms? A 2nd grade middle-schooler would mind this.

The recent DeepFake

porn cases in South Korea seem to have been related to 2nd grade middle schooler who

acquired the recently updated DeepFake apps very easy to install distribution

packages such as the simple one-click installer or portable version described

above, and used them to tiptoe around their acquaintances' Instagram accounts,

bragging about it to their peers.

Why should all

grown adults in South Korea be responsible for this rampage of deranged

middle-schoolers, and why should South Korean men as a whole feel guilty about

this? The rampage of middle-schoolers is the responsibility of their parents,

both civilly and criminally. Should we declare a DeepFake state of emergency in

the midst of this? Is the Gigantic Femi Nazi State (Nageuhan) going crazy?

If you want

to kill this all cursed and all evil deepfake technology, then by all means,

wipe out the AI industry in South Korea, and block GitHub, where the source

code for these deepfake apps still resides, declare it as a harmful site, and

let Warning.go.kr attached to it.

In the

United States, where the majority of the population is Wordcel, everyone is

madly cheering for LLM artificial intelligence and going crazy as if this is a

technology that will change the fate of mankind, but the artificial

intelligence technology that will fundamentally disrupt the future of mankind

by conducting war and combining with machinery and robots is computer vision

artificial intelligence related to computer image and vision that can do

deep-fake.

If this

computer vision AI is properly applied to China's mass-produced robots, the

world's strongest army can be created, such as a large-scale robot army that

does not need human soldiers to kill people. And in this field, China is far

ahead of the United States in the number of academic papers and the number of

researchers. In other words, China is the best in this field.

Because the

CCP is the best in this field, so the CCP's K-POP idol deepfake photos and

videos are the most common. There is also an article in Rolling Stone Magazine

about Korean idol deepfakes. Here's the article.

"Interestingly,

Ajder says, the data shows that the majority of users in the online forums

generating deepfakes aren't from South Korea, but China, which plays host to

one of the biggest K-pop markets in the world. (Source: https://www.rollingstone.com/culture/culture-news/deepfakes-nonconsensual-porn-study-kpop-895605/)"

So if this

technology is evil and a damage to South Korean society, suppress it in so much that there

are no researchers and no research projects in South Korea, so that South Korea

can become a colony of the Chinese Communist Party, with fewer than a handful

of South Korean human soldiers massacred by the Chinese robot army.

Femi Nazi

forces who demean men's military service by saying that they are taking

vacation as form of conscription, must hope that they are not serving as soldiers

when the CCP's robot army massacres the human soldiers of the South Korean military

because the robot army will never find human female soldiers pretty.